This is part two of a series detailing the steps required to run Kubeapps on a VMware TKG management cluster (on AWS) configured to allow users to deploy applications to multiple workload clusters, using the new multicluster support in Kubeapps. Though details will differ, a similar configuration works on other non-TKG multicluster setups as well.

The first post described setting up your VMware TKG management cluster with two OpenID Connect-enabled workload clusters. This post assumes you have your TKG environment set up as described there and focuses on the Kubeapps installation and configuration. The following video demos the result:

Prepare a Kubeapps configuration

We’ll need two random values, the client secret and the cookie secret, for Kubeapps’ oauth2-proxy configuration, so generate them with:

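One way to produce both values is a minimal Python sketch using the standard library’s `secrets` module. The 16-byte length (32 hex characters) is an assumption. It is chosen to fit oauth2-proxy’s requirement that the cookie secret be 16, 24 or 32 bytes.

```python
import secrets


def generate_oauth2_proxy_secrets() -> tuple[str, str]:
    """Return (client_secret, cookie_secret) for Kubeapps' oauth2-proxy.

    Each is 16 random bytes rendered as 32 hex characters. oauth2-proxy
    needs a cookie secret of 16, 24 or 32 bytes for its AES cipher, and a
    32-character value fits that requirement.
    """
    client_secret = secrets.token_hex(16)
    cookie_secret = secrets.token_hex(16)
    return client_secret, cookie_secret


if __name__ == "__main__":
    client_secret, cookie_secret = generate_oauth2_proxy_secrets()
    print(f"client secret: {client_secret}")
    print(f"cookie secret: {cookie_secret}")
```

Keep both values handy. The client secret goes into the values file and also into the Dex client entry for kubeapps-oauth2-proxy.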

Next, create a kubeapps-tkg-values.yaml file with the following contents, substituting in the secrets generated above and the values from earlier steps:

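The values file can also be generated from a script. The sketch below assumes the following Kubeapps chart keys from this era:

- `authProxy.enabled`, `authProxy.provider`, `authProxy.clientID`, `authProxy.clientSecret`, `authProxy.cookieSecret` and `authProxy.additionalFlags`
- `clusters`
- `frontend.service.type`

Those key names, the `kubeapps-oauth2-proxy` client ID and the issuer URL are all assumptions to check against your chart’s own values.yaml. Because JSON is valid YAML, writing the dict with `json.dumps` produces a file Helm can read.

```python
import json
from pathlib import Path


def kubeapps_values(client_secret: str, cookie_secret: str, issuer_url: str,
                    scope: str, clusters: list[dict]) -> dict:
    """Helm values for Kubeapps with oauth2-proxy and several clusters.

    The key names are assumed from the Kubeapps chart of this era; check
    them against the values.yaml of the chart version you install.
    Each entry in `clusters` has name, apiServiceURL and
    certificateAuthorityData.
    """
    return {
        "authProxy": {
            "enabled": True,
            "provider": "oidc",
            "clientID": "kubeapps-oauth2-proxy",  # must match the Dex client id
            "clientSecret": client_secret,
            "cookieSecret": cookie_secret,
            "additionalFlags": [
                f"--oidc-issuer-url={issuer_url}",
                # Requests the workload cluster client IDs in the token audience.
                f"--scope={scope}",
            ],
        },
        "clusters": clusters,
        # Expose the frontend through an AWS load balancer.
        "frontend": {"service": {"type": "LoadBalancer"}},
    }


def write_values(values: dict, path: str = "kubeapps-tkg-values.yaml") -> None:
    # JSON is valid YAML, so Helm can read this file via --values.
    Path(path).write_text(json.dumps(values, indent=2))
```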

As noted in the comments there, the most important part of the authProxy configuration is the --scope argument. Besides the usual openid, email and groups scopes, it requests that the workload cluster client IDs be included in the audience of the token issued to kubeapps-oauth2-proxy. More about this below.
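Dex implements this through cross-client scopes of the form `audience:server:client_id:<client-id>`. Below is a small sketch of assembling the `--scope` value. The cluster client IDs `tkg-wld-01` and `tkg-wld-02` are hypothetical placeholders for your workload cluster names.

```python
def dex_scope(cluster_client_ids: list[str]) -> str:
    """Build the oauth2-proxy --scope value for Dex cross-client trust.

    The usual openid/email/groups scopes are followed by one
    audience:server:client_id:<id> scope per workload cluster client, which
    asks Dex to add that client ID to the token's audience.
    """
    base = ["openid", "email", "groups"]
    cross_client = [f"audience:server:client_id:{cid}" for cid in cluster_client_ids]
    return " ".join(base + cross_client)


if __name__ == "__main__":
    # Hypothetical client IDs; use your workload cluster names.
    print(dex_scope(["tkg-wld-01", "tkg-wld-02"]))
```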

The apiServiceURL and certificateAuthorityData values for Kubeapps’ clusters configuration are all in your kubeconfig already, so grab them from there:

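Rather than copying base64 by hand, you could use a sketch that pulls both values for a named cluster out of `kubectl config view --raw -o json`. The `--raw` flag matters, because without it kubectl redacts the certificate data. The returned dict is shaped like one entry in Kubeapps’ clusters list. The sketch assumes the kubeconfig embeds `certificate-authority-data` rather than pointing to a CA file.

```python
import json
import subprocess


def load_kubeconfig() -> dict:
    # --raw keeps certificate-authority-data instead of redacting it.
    out = subprocess.run(
        ["kubectl", "config", "view", "--raw", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)


def cluster_entry(kubeconfig: dict, cluster_name: str) -> dict:
    """Return a Kubeapps `clusters` entry for one cluster in a kubeconfig."""
    for entry in kubeconfig.get("clusters", []):
        if entry["name"] == cluster_name:
            cluster = entry["cluster"]
            return {
                "name": cluster_name,
                "apiServiceURL": cluster["server"],
                # Raises KeyError if the kubeconfig uses a CA file path instead.
                "certificateAuthorityData": cluster["certificate-authority-data"],
            }
    raise KeyError(f"cluster {cluster_name!r} not in kubeconfig")
```

For example, `[cluster_entry(load_kubeconfig(), name) for name in ("tkg-wld-01", "tkg-wld-02")]` would give the list for the clusters value. The cluster names there are hypothetical.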

With your kube-context set to the management cluster, create a namespace for Kubeapps and install away!

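The same two steps can be written as a Python sketch. The namespace, the release name and the `bitnami/kubeapps` chart reference are all assumptions. The chart reference in particular requires the Bitnami repository to have been added with `helm repo add`. The management cluster’s context is passed explicitly here rather than relying on the current context, so a stray context switch can’t send the install to a workload cluster.

```python
import subprocess

# Hypothetical names; adjust to your environment.
NAMESPACE = "kubeapps"
RELEASE = "kubeapps"
CHART = "bitnami/kubeapps"  # assumes the Bitnami repo was added with `helm repo add`
VALUES_FILE = "kubeapps-tkg-values.yaml"


def install_commands(mgmt_context: str) -> list[list[str]]:
    """Commands that create the namespace and install Kubeapps (Helm 3)."""
    return [
        ["kubectl", "--context", mgmt_context, "create", "namespace", NAMESPACE],
        ["helm", "install", RELEASE, CHART,
         "--kube-context", mgmt_context,
         "--namespace", NAMESPACE,
         "--values", VALUES_FILE],
    ]


def install(mgmt_context: str) -> None:
    for cmd in install_commands(mgmt_context):
        subprocess.run(cmd, check=True)  # stop at the first failing command
```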

Once the pods are all up and running, look up the domain name AWS assigned to the frontend LoadBalancer service:

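AWS reports its load balancers by DNS name, under `status.loadBalancer.ingress[].hostname`, rather than by IP. The sketch below lists the hostname of every LoadBalancer service in the namespace, assuming Kubeapps was installed into `kubeapps`. A service’s list stays empty until AWS has finished provisioning the load balancer.

```python
import json
import subprocess


def get_services(namespace: str = "kubeapps") -> dict:
    out = subprocess.run(
        ["kubectl", "get", "services", "--namespace", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)


def load_balancer_hostnames(service_list: dict) -> dict:
    """Map each LoadBalancer service name to its external hostname(s)."""
    result = {}
    for svc in service_list.get("items", []):
        if svc.get("spec", {}).get("type") != "LoadBalancer":
            continue  # ClusterIP/NodePort services have no AWS hostname
        ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress", [])
        result[svc["metadata"]["name"]] = [i["hostname"] for i in ingress if "hostname" in i]
    return result
```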

and open it in a browser to verify that Kubeapps is served at that address. Note that logging in will result in a bad request until we update Dex’s configuration.

Configuring a Kubeapps client for Dex

The last step is to edit the Dex configuration to add a kubeapps-oauth2-proxy client. Importantly, the edit must also make Dex treat the kubeapps-oauth2-proxy client as a trusted peer of each cluster client. This allows us to request that the cluster client IDs be included in the audience of the token returned to kubeapps-oauth2-proxy. That, in turn, lets each cluster’s API server trust a token issued to the kubeapps-oauth2-proxy client.
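When debugging this setup, it helps to look at a token’s `aud` claim yourself. The API server is started with `--oidc-client-id` and accepts a token only if its `aud` contains that client ID. The sketch below decodes a JWT payload without verifying the signature, so it is for inspection only. It then mirrors that audience check.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode a JWT's payload WITHOUT verifying its signature.

    Only for inspecting a token while debugging; never use this to trust one.
    """
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64url padding
    return json.loads(base64.urlsafe_b64decode(payload))


def audience_includes(claims: dict, client_id: str) -> bool:
    """Mirror the audience part of the API server's OIDC check.

    `aud` may be a single string or a list of strings.
    """
    aud = claims.get("aud", [])
    if isinstance(aud, str):
        aud = [aud]
    return client_id in aud
```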

To do this, edit the same Dex configuration file we worked with previously when setting up authentication for our TKG clusters:


and add a second static client for the second workload cluster, plus a third static client for kubeapps-oauth2-proxy. The second cluster’s client name must match the name of your second workload cluster. You don’t need a secret or redirect URIs for it, since we didn’t install Gangway on that cluster. Note that each cluster client lists kubeapps-oauth2-proxy as a trusted peer:

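The edit can also be scripted. The sketch below takes the parsed `staticClients` list and returns an updated copy with three changes:

- Each cluster client gains the Kubeapps client ID in its `trustedPeers`.
- A bare entry is added for any cluster client that doesn’t exist yet.
- A Kubeapps client is appended.

The redirect URI is assumed to be oauth2-proxy’s default `/oauth2/callback` path on the frontend load balancer hostname. The IDs, secret and hostname in the usage are hypothetical placeholders.

```python
import copy


def with_kubeapps_client(static_clients: list[dict], cluster_ids: list[str],
                         kubeapps_id: str, kubeapps_secret: str,
                         redirect_uri: str) -> list[dict]:
    """Return a copy of Dex's staticClients updated for Kubeapps.

    - Every cluster client lists kubeapps_id under trustedPeers, so Dex
      accepts audience:server:client_id:<cluster> scopes from Kubeapps.
    - Cluster clients that don't exist yet (e.g. the second workload
      cluster, which has no Gangway) are added with just id and name.
    - A client for kubeapps-oauth2-proxy itself is appended if missing.
    """
    clients = copy.deepcopy(static_clients)  # leave the input untouched
    by_id = {c["id"]: c for c in clients}
    for cid in cluster_ids:
        client = by_id.get(cid)
        if client is None:
            client = {"id": cid, "name": cid}
            clients.append(client)
            by_id[cid] = client
        peers = client.setdefault("trustedPeers", [])
        if kubeapps_id not in peers:
            peers.append(kubeapps_id)
    if kubeapps_id not in by_id:
        clients.append({
            "id": kubeapps_id,
            "name": kubeapps_id,
            "secret": kubeapps_secret,
            "redirectURIs": [redirect_uri],
        })
    return clients
```

The function is idempotent, so running it twice over the same config doesn’t duplicate peers or clients.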

While editing this file, if you’re using Google as your upstream identity provider, I recommend one further change: ensure Dex only allows authentication for email addresses from specific domains. In the config section of the oidc connector, uncomment and set the following to match the domains you want to allow, for example:

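The sketch below is a simplified model of this restriction, not Dex’s actual code. It assumes Dex’s `hostedDomains` option compares Google’s `hd` (hosted domain) claim with the configured list rather than parsing the email address. Under that assumption, personal Gmail accounts, which carry no `hd` claim, are rejected once the list is non-empty.

```python
def google_domain_allowed(claims: dict, hosted_domains: list[str]) -> bool:
    """Simplified model of the hostedDomains check in Dex's OIDC connector.

    An empty list allows every account; otherwise the token's `hd` claim
    must be one of the configured domains.
    """
    if not hosted_domains:
        return True
    return claims.get("hd") in hosted_domains
```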

After applying the config, bounce Dex by deleting the Dex pod:

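Here is a sketch of the same restart via kubectl from Python. The `tanzu-system-auth` namespace and the `app=dex` label are assumptions based on the TKG authentication extension. Confirm both with `kubectl get pods --all-namespaces --show-labels` before running. Deleting the pod works because its Deployment recreates it, and the new pod reads the updated configuration.

```python
import subprocess

# Assumptions: adjust to where Dex actually runs in your management cluster.
DEX_NAMESPACE = "tanzu-system-auth"
DEX_SELECTOR = "app=dex"


def bounce_dex_command(context: str) -> list[str]:
    """kubectl command deleting the Dex pod(s) so they are recreated."""
    return ["kubectl", "--context", context, "delete", "pod",
            "--namespace", DEX_NAMESPACE, "--selector", DEX_SELECTOR]


def bounce_dex(context: str) -> None:
    subprocess.run(bounce_dex_command(context), check=True)
```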

With this, Dex will authenticate you successfully, but Kubeapps still won’t let you in because your user doesn’t yet have access to the clusters. So switch to the first workload cluster and create the RBAC binding that gives you cluster-admin:


and then repeat after switching context to your second workload cluster.
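Both clusters need the same binding, so the sketch below builds one ClusterRoleBinding and applies it to a list of contexts. A few things are assumptions:

- The binding name and contexts are hypothetical.
- The contexts must be admin contexts, since your OIDC user can’t yet grant itself access.
- `username` must match what the API server derives from your token. That is, for example, your email if the clusters use `--oidc-username-claim=email`, plus any configured `--oidc-username-prefix`.

```python
import json
import subprocess


def cluster_admin_binding(username: str, binding_name: str) -> dict:
    """ClusterRoleBinding granting cluster-admin to an OIDC user."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": binding_name},
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": "cluster-admin",
        },
        "subjects": [{
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "User",
            "name": username,
        }],
    }


def apply_to_contexts(manifest: dict, contexts: list[str]) -> None:
    # kubectl apply accepts a JSON manifest on stdin via "-f -".
    body = json.dumps(manifest)
    for context in contexts:
        subprocess.run(
            ["kubectl", "--context", context, "apply", "-f", "-"],
            input=body, text=True, check=True,
        )
```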

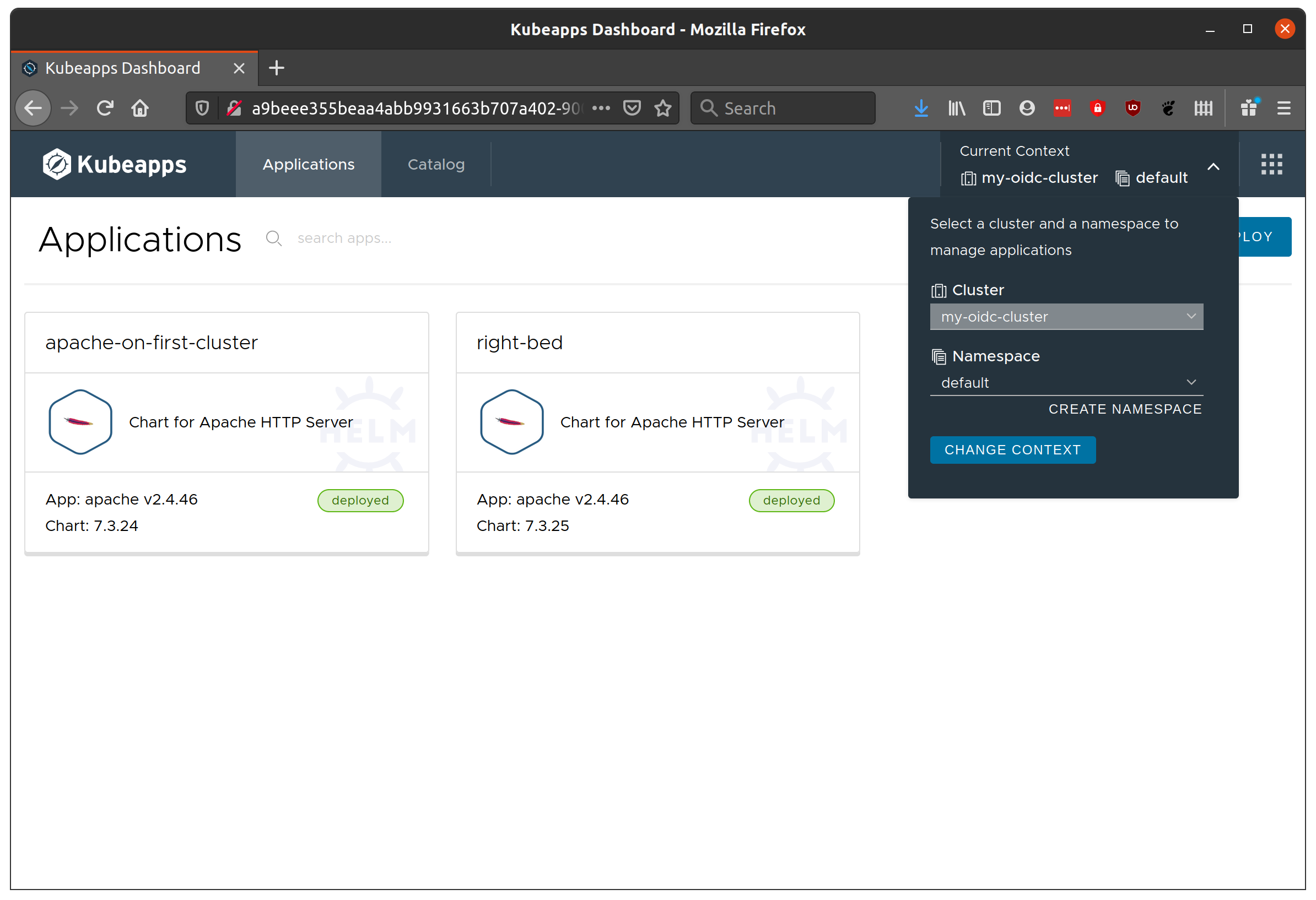

When you now log in to Kubeapps, you should be presented with the UI, including the option to switch between your clusters!

Well done! Of course, there are many points here where errors could occur and require debugging. If there’s a need, I’ll follow up with a post on common issues and how to debug them.